Get Started

🐂 What is Oxen?

Oxen.ai is a developer platform for fine-tuning and evaluating models on your own data.

Oxen.ai gives you the tools and infrastructure to build the best model for your use case.

The platform makes it easy to spin up serverless GPU infrastructure to train models or run inference. When you are done, the datasets, model weights, and code are all versioned and stored in an Oxen.ai repository for easy iteration, comparison, and collaboration.

✅ Features

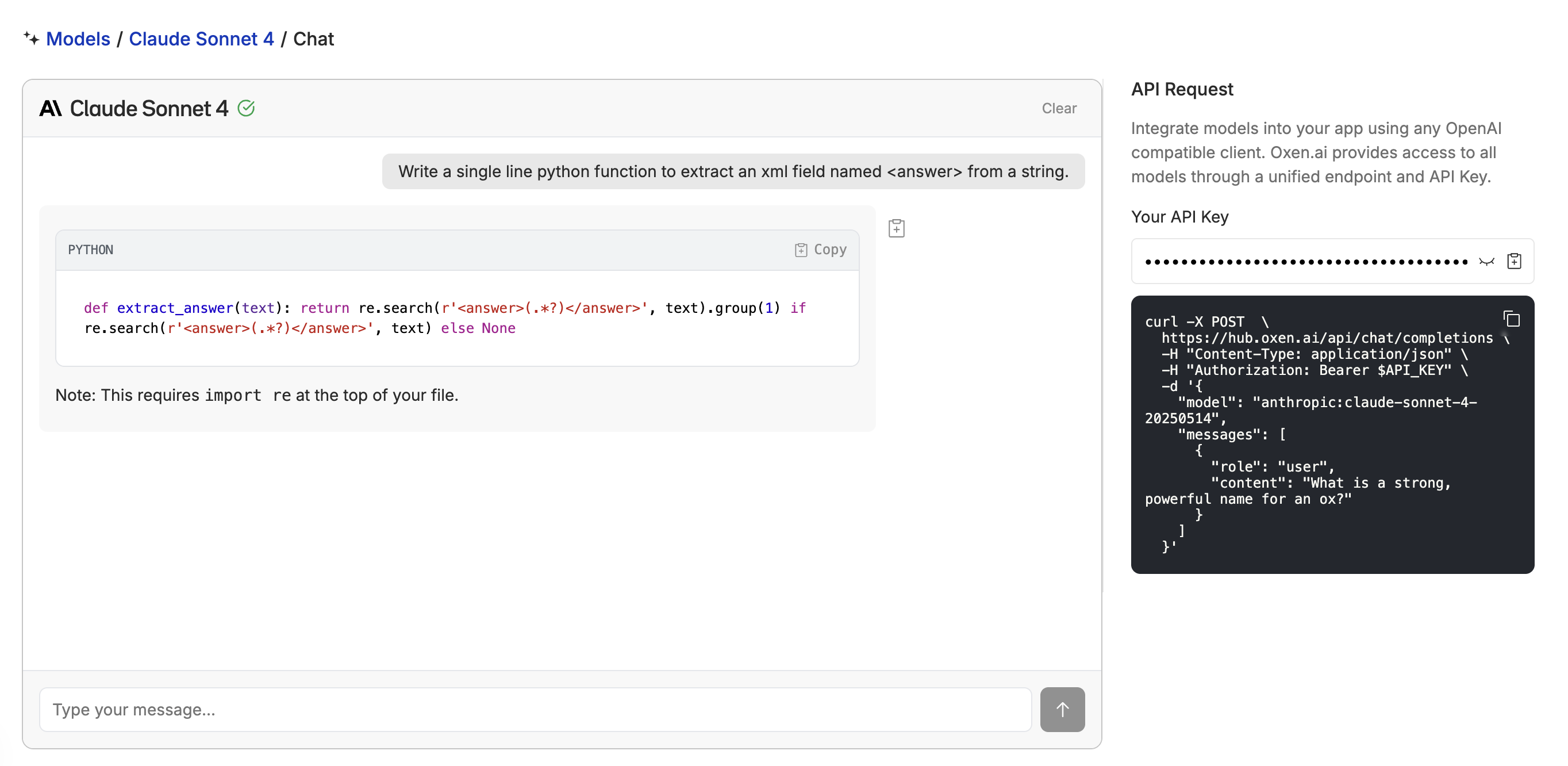

Whether you are iterating on your prompts while using a closed source model or fine-tuning an open source model, Oxen.ai gives you the tools to focus on what matters most: building great models.

- ⚡️ Inference - Quickly iterate on prompts and models

- 🚀 Fine-Tuning - Go from dataset to deployable model in a few clicks

- 📊 Datasets - Build datasets for training, fine-tuning, or evaluating models

- 🔬 Evaluation - Find the best model and prompt given your dataset and use case

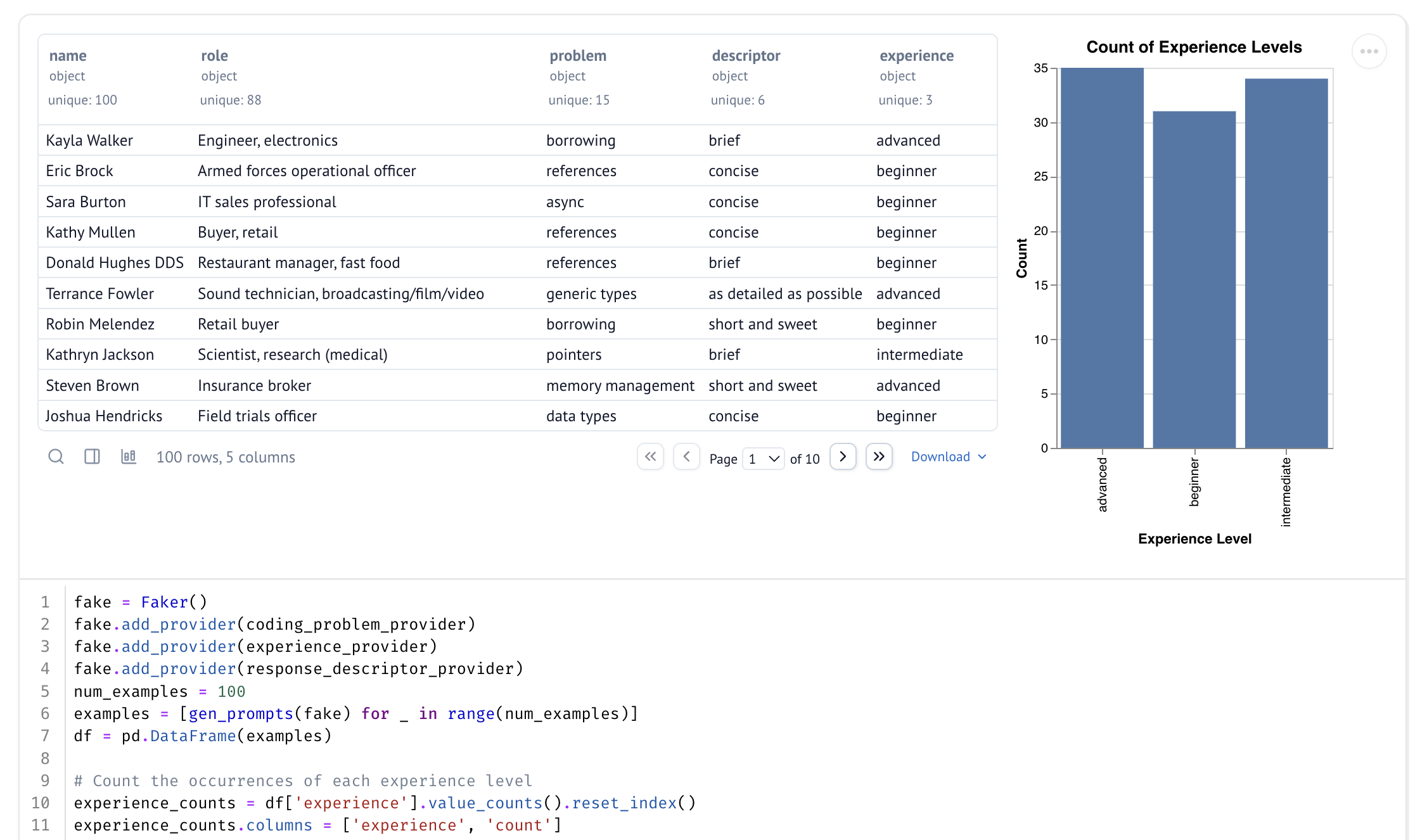

- 📓 Notebooks - Write custom code to interact with your datasets and models

- 💾 Version Control - Sync your datasets, model weights, and code with a collaborative hub

⚡ Quickly Iterate on Models

Whether you are making your first LLM call or need to deploy a fine-tuned model, Oxen.ai gives you the flexibility to swap models through a unified Model API. The interface is OpenAI compatible and supports foundation models from Anthropic, Google, Meta, and OpenAI. See the list of supported models to get started. Closed source models not working for your use case? Fine-tune your own model, optimizing it for accuracy, speed, or cost, and deploy it to the same interface in minutes.
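Because the Model API follows the OpenAI chat completions format, switching models should only require changing the `model` field of the request. The sketch below builds that request with the standard library but does not send it. The base URL, the model identifiers, and the API key are hypothetical placeholders, so check the Oxen.ai docs for the real endpoint and model names.

```python
import json
import urllib.request

# Hypothetical base URL: replace with the endpoint from the Oxen.ai docs.
BASE_URL = "https://hub.oxen.ai/api"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Swapping models only changes the "model" field; the rest of the request is identical.
# Both model identifiers below are hypothetical placeholders.
for model in ["claude-3-7-sonnet", "my-org/my-fine-tuned-model"]:
    req = build_chat_request(model, "Summarize this ticket in one sentence.", "YOUR_API_KEY")
    print(req.get_method(), req.full_url, json.loads(req.data)["model"])
```

If you already use the `openai` Python client, you can likely get the same effect by pointing its `base_url` at the Oxen.ai endpoint. Passing the result to `urllib.request.urlopen` would send the request.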

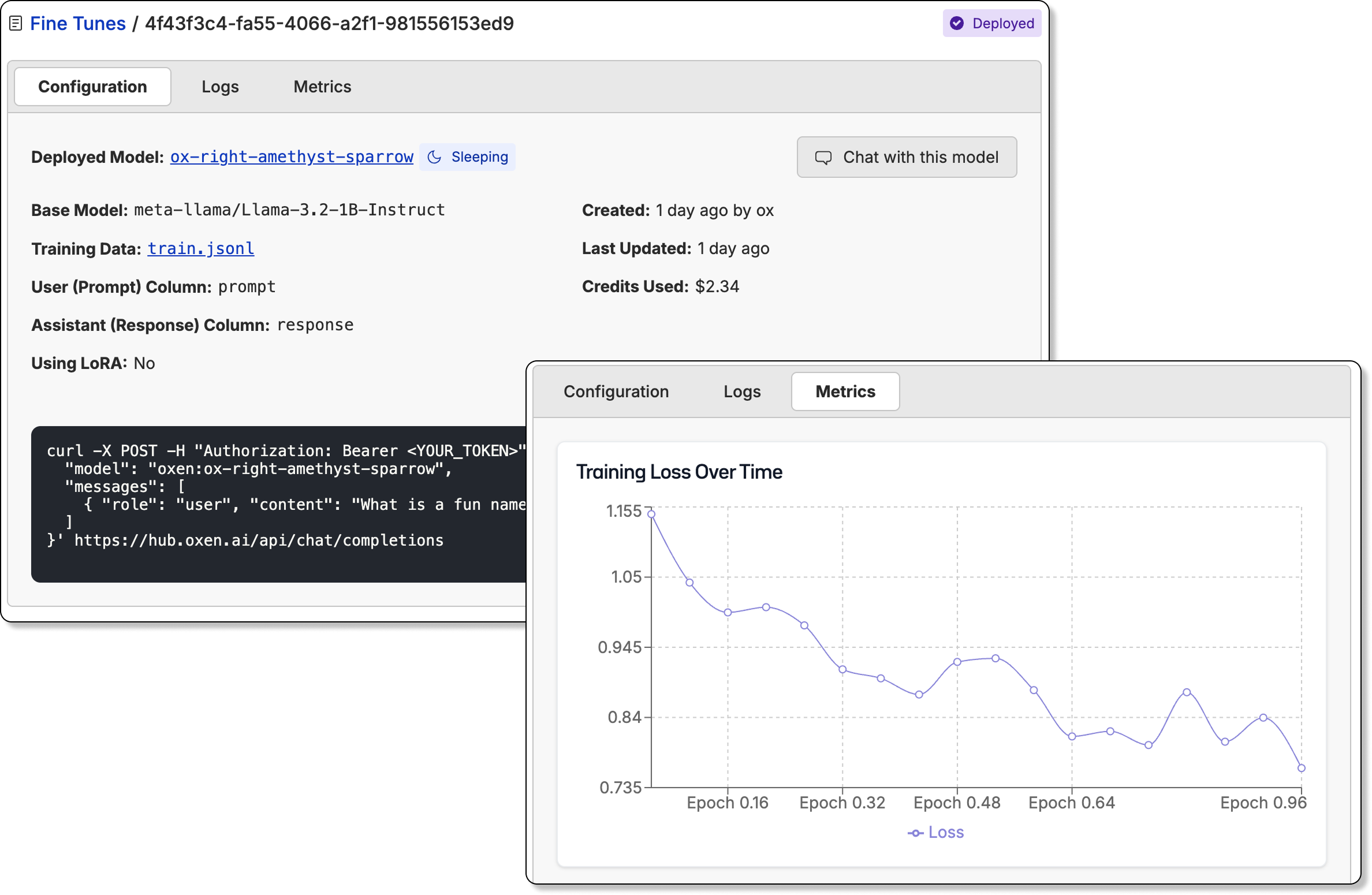

🚀 Fine-Tune Models

The best models are the ones that understand your context and continue to learn from your data over time. Go from dataset to model in a few clicks with Oxen.ai’s fine-tuning tooling. Select a dataset, define your inputs and outputs, and let Oxen.ai do the grunt work. Oxen saves model weights to its version store, tying the weights to the dataset and code that were used to train them. Once the model has been fine-tuned, you can easily deploy it behind an inference endpoint and start the evaluation loop over again.
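To make "define your inputs and outputs" concrete, the sketch below maps two columns of a dataset to prompt/response pairs in chat-message form. This is only an illustration. The column names, the rows, and the chat-message layout are assumptions, and the function shows the general idea rather than how Oxen.ai's fine-tuning tooling formats data internally.

```python
import json


def to_chat_examples(rows, input_col, output_col, system_prompt=None):
    """Turn dataset rows into prompt/response pairs in chat-message form.

    input_col and output_col play the role of the "inputs" and "outputs"
    you select for fine-tuning; the chat-message layout is an assumption.
    """
    examples = []
    for row in rows:
        messages = []
        if system_prompt:
            messages.append({"role": "system", "content": system_prompt})
        messages.append({"role": "user", "content": row[input_col]})
        messages.append({"role": "assistant", "content": row[output_col]})
        examples.append({"messages": messages})
    return examples


# Hypothetical dataset rows with "question" and "answer" columns.
rows = [
    {"question": "What is 2 + 2?", "answer": "4"},
    {"question": "Capital of France?", "answer": "Paris"},
]
examples = to_chat_examples(rows, "question", "answer")

# One JSON object per line (JSONL), a common layout for fine-tuning data.
jsonl = "\n".join(json.dumps(ex) for ex in examples)
print(jsonl)
```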

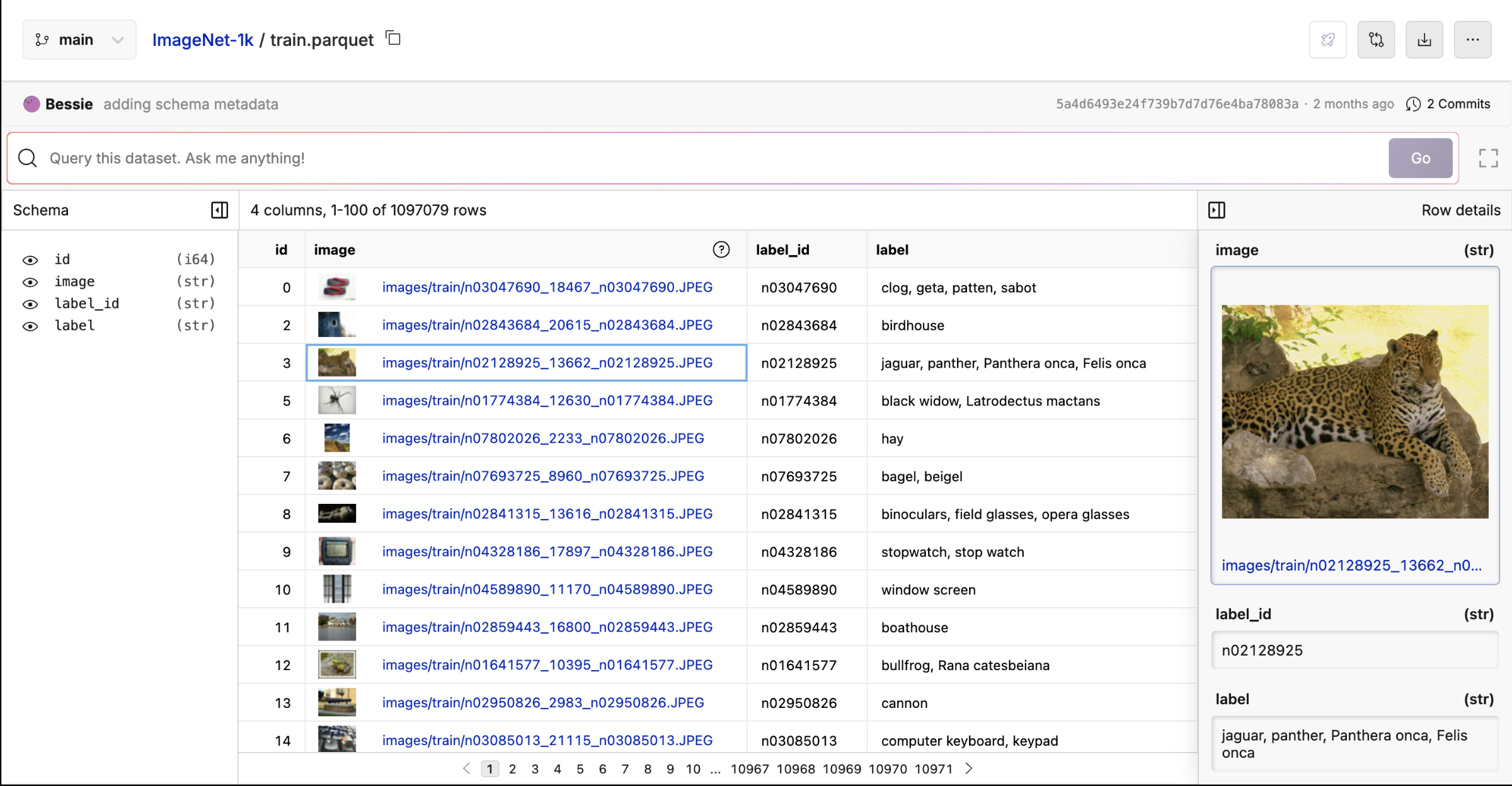

📊 Build Datasets

Quality datasets are the difference between prototypes and production models. Collaborate on multi-modal datasets used for training, fine-tuning, or evaluating models. Because datasets are backed by Oxen.ai’s version control, you never have to worry about remembering what data a model was trained or evaluated on. Learn how to work with datasets using the Oxen Python Library, or read more about the supported dataset types and formats here.
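Version control systems generally identify each version of a file by a hash of its content. That is what lets a model be tied to the exact data it saw. The toy manifest below uses SHA-256 to illustrate the concept. It is not how Oxen.ai stores or links files, and the byte strings are placeholders for real files.

```python
import hashlib
import json


def content_hash(data: bytes) -> str:
    """Return a SHA-256 hex digest identifying this exact content."""
    return hashlib.sha256(data).hexdigest()


def training_manifest(dataset: bytes, code: bytes, weights: bytes) -> dict:
    """Record which dataset and code produced a set of weights (toy example)."""
    return {
        "dataset": content_hash(dataset),
        "code": content_hash(code),
        "weights": content_hash(weights),
    }


# Placeholder byte strings stand in for real dataset, code, and weight files.
v1 = training_manifest(b"id,text,label\n1,hi,pos\n", b"train.py v1", b"\x00\x01")
v2 = training_manifest(b"id,text,label\n1,hi,neg\n", b"train.py v1", b"\x00\x02")

# Changing a single label changes the dataset hash, so the two runs stay distinguishable.
print(v1["dataset"] != v2["dataset"])  # True
print(v1["code"] == v2["code"])        # True
print(json.dumps(v1, indent=2))
```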

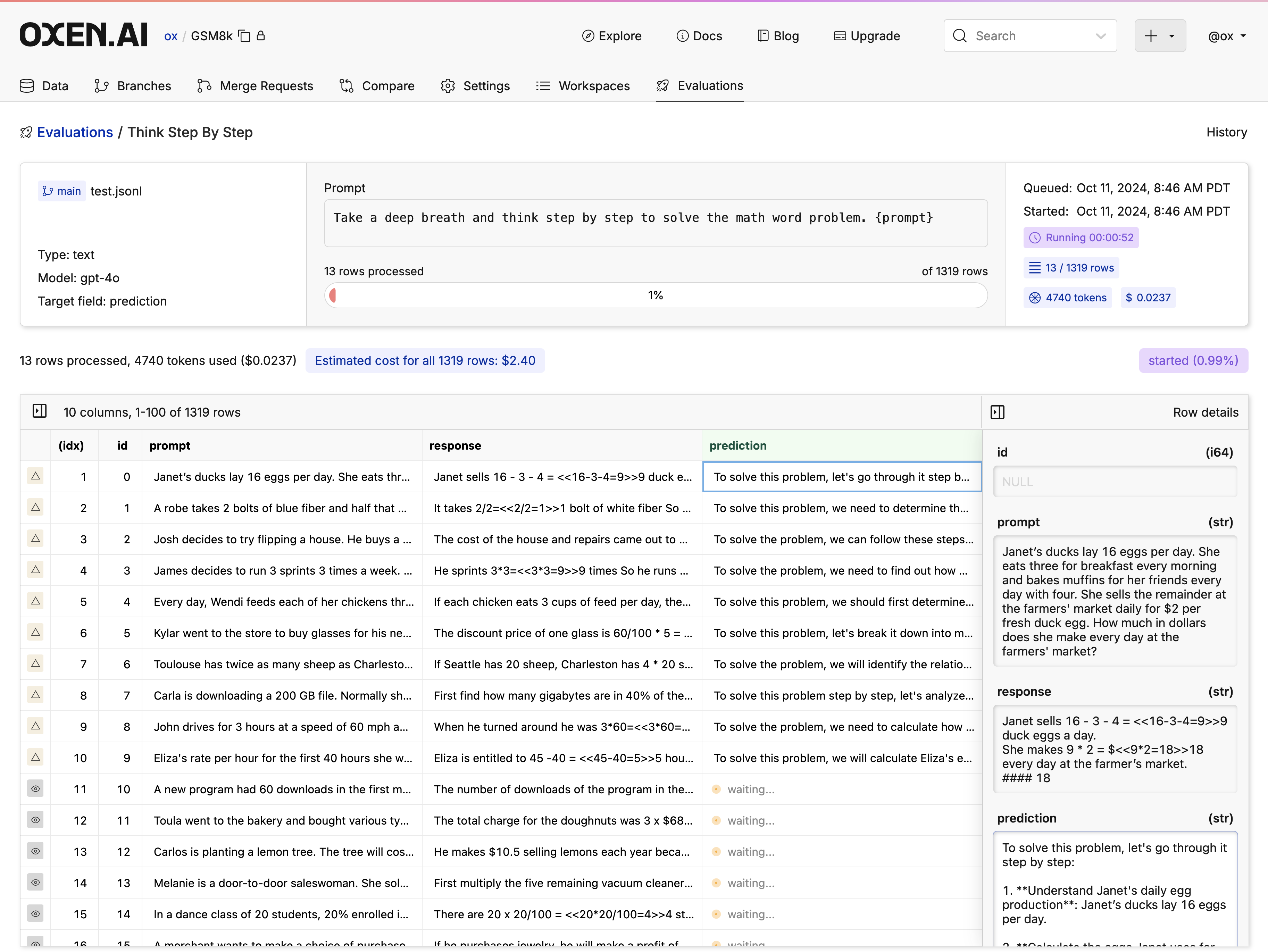

🔬 Evaluate Models

Find the best model and prompt for your use case. Leverage your own datasets to build custom evaluations. Evaluation results are versioned and saved as datasets in the repository for easy performance tracking over time.
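A custom evaluation can be as simple as running each row of a dataset through a model and scoring the answer. The sketch below uses case-insensitive exact match as the metric. It returns per-row results as CSV, so they could be saved back to a repository as a dataset. The rows, the stand-in `fake_model`, and the choice of metric are all hypothetical, and they exist only so the example runs offline.

```python
import csv
import io


def evaluate(rows, predict):
    """Score predict() against each row's expected answer with exact match.

    Returns per-row results (ready to save as a dataset) and overall accuracy.
    """
    results = []
    for row in rows:
        prediction = predict(row["prompt"])
        correct = prediction.strip().lower() == row["expected"].strip().lower()
        results.append({**row, "prediction": prediction, "correct": correct})
    accuracy = sum(r["correct"] for r in results) / len(results) if results else 0.0
    return results, accuracy


def to_csv(results) -> str:
    """Serialize per-row results to CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(results[0].keys()))
    writer.writeheader()
    writer.writerows(results)
    return buf.getvalue()


# Hypothetical eval rows and a stand-in "model" (a dict lookup) so this runs offline.
rows = [
    {"prompt": "2 + 2 =", "expected": "4"},
    {"prompt": "Capital of France?", "expected": "Paris"},
]
fake_model = {"2 + 2 =": "4", "Capital of France?": "Lyon"}.get
results, accuracy = evaluate(rows, fake_model)
print(accuracy)  # 0.5
print(to_csv(results))
```

In practice, `predict` would call a model endpoint. Exact match is only one choice of metric, and many tasks need fuzzier scoring.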

📓 Write Notebooks

Spin up a Marimo notebook on a GPU or CPU in seconds. Use the Oxen Python Library to interact with your datasets and models. Write custom code to process your data, visualize distributions, compute metrics, and even train models.
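As a small example of the kind of code you might run in a notebook, the sketch below counts the values in a label column and renders the distribution as a text bar chart. It uses only the standard library. The labels are hypothetical, and in a real notebook you would probably load them from a dataset and use a plotting library instead.

```python
from collections import Counter


def text_histogram(values, width=20):
    """Render a simple text bar chart of value counts, most common first."""
    counts = Counter(values)
    if not counts:
        return ""
    top = max(counts.values())
    lines = []
    for value, count in counts.most_common():
        # Scale each bar relative to the most common value; always show at least one '#'.
        bar = "#" * max(1, round(width * count / top))
        lines.append(f"{value:<10} {bar} {count}")
    return "\n".join(lines)


# Hypothetical label column from a classification dataset.
labels = ["positive", "negative", "positive", "neutral", "positive", "negative"]
print(text_histogram(labels))
```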